Datasets


Video Segmentation Benchmark (VSB100)


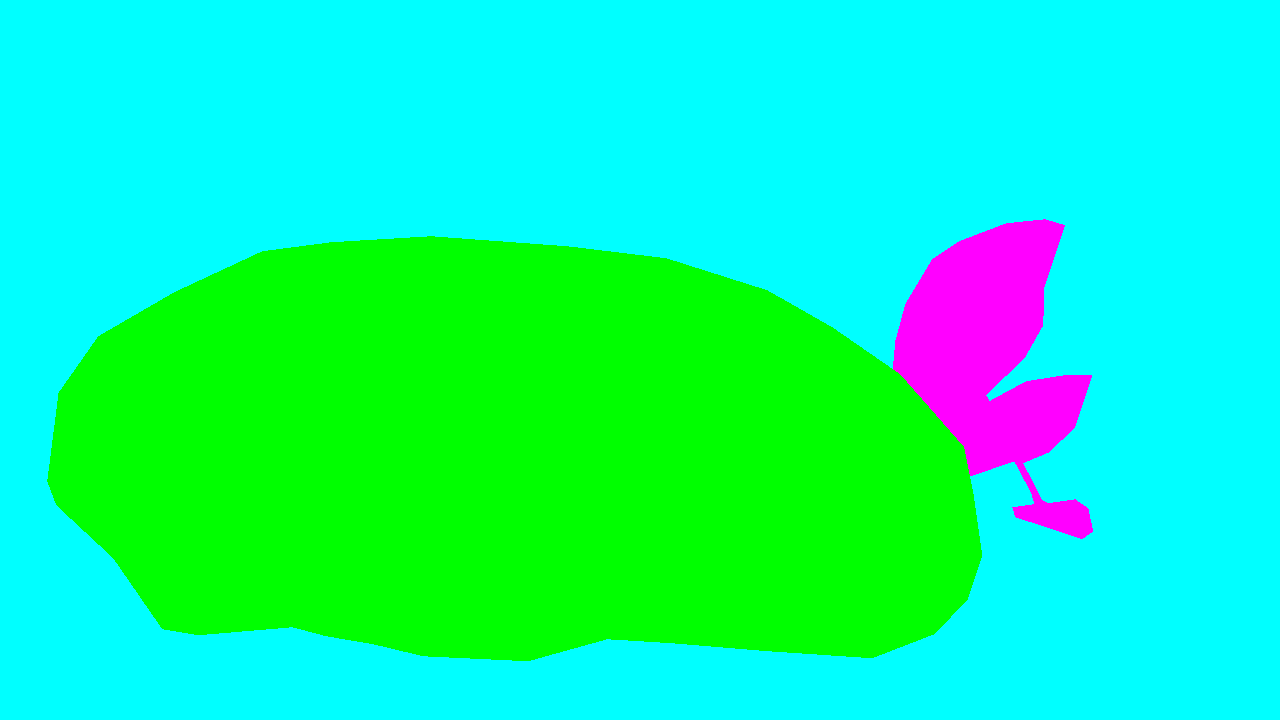

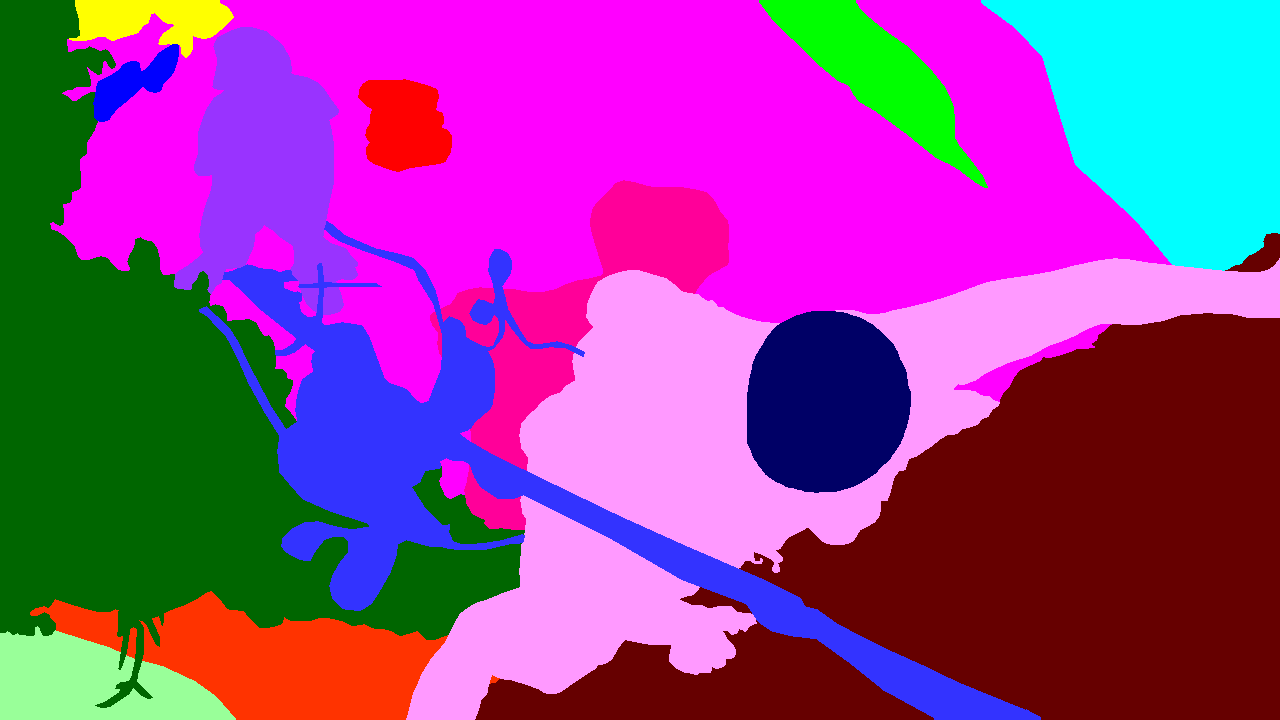

We provide ground truth annotations for the Berkeley Video Dataset, which consists of 100 HD quality videos divided into train and test folders containing 40 and 60 videos, respectively. Each video was annotated by four different people. The annotations come with the evaluation software used for the experiments reported in Galasso et al. (ICCV 2013).

Download training set (40 videos, external link to Berkeley server)

Download test set (60 videos, external link to Berkeley server)

Local links for Train and Test set

Train set

Test set

Download annotation

Full frame annotation - General Benchmark

Training set [Full Res] [Half Res]

Test set [Full Res] [Half Res]

Download evaluation code (ver 1.3)

Denser annotations for the training set by Khoreva et al., GCPR 2014: [Full Res] [Half Res]

Subtasks

Motion - objects which undergo significant motion. Objects such as snow and fire are excluded.

Training set [Full Res] [Half Res]

Test set [Full Res] [Half Res]

Non-rigid motion - subset of the Motion subtask, objects which undergo significant articulated motion

Training set [Full Res] [Half Res]

Test set [Full Res] [Half Res]

Camera motion - sequences where the camera moves

Training set [Full Res] [Half Res]

Test set [Full Res] [Half Res]

Mirror links of VSB100

[Mirror 1] [Mirror 2]

Terms of use

The dataset is provided for research purposes. Any commercial use is prohibited. When using the dataset in your research work, you should cite the following papers:

F. Galasso, N.S. Nagaraja, T.J. Cardenas, T. Brox, B. Schiele

A Unified Video Segmentation Benchmark: Annotation, Metrics and Analysis,

International Conference on Computer Vision (ICCV), 2013.

P. Sundberg, T. Brox, M. Maire, P. Arbelaez, and J. Malik

Occlusion Boundary Detection and Figure/Ground Assignment from Optical Flow,

Computer Vision and Pattern Recognition (CVPR), 2011

Contact

Fabio Galasso, Naveen Shankar Nagaraja

Activity on VSB and bug reports

We appreciate your consideration of our work. To keep the benchmark a lively comparison arena, we maintain below a brief log of activities, bug reports and critiques. Please email us with any comments.

06.08.14 parallelized benchmark code (code ver. 1.3): the estimation of the BPR and VPR benchmark metrics as well as the length statistics is now parallelized. The for loops in the functions "Benchmarksegmevalparallel" and "Benchmarkevalstatsparallel" use the parallel Matlab "parfor" command. Once multiple Matlab workers are initiated (cf. the Matlab instructions for parfor), every video is benchmarked in parallel.
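The benchmark code itself is Matlab and is not reproduced here. As a rough illustration of the same idea (each video is scored independently, so the per-video loop can be spread across workers), here is a minimal Python sketch. The function `evaluate_video`, its placeholder score and the video names other than "galapagos" are hypothetical and are not part of the VSB100 code; a thread pool is used only to keep the sketch self-contained, and a process pool would be the closer analogue of Matlab workers for CPU-bound work.

```python
from concurrent.futures import ThreadPoolExecutor


def evaluate_video(video_name):
    """Hypothetical stand-in for the per-video benchmark computation."""
    # Placeholder score: real code would compute BPR/VPR for this video.
    return video_name, len(video_name)


def benchmark_all(video_names, max_workers=4):
    """Evaluate every video independently and collect the results by name."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order, so results line up with video_names.
        return dict(pool.map(evaluate_video, video_names))


videos = ["galapagos", "video_a", "video_b"]  # illustrative names only
scores = benchmark_all(videos)
```

Because no video depends on another, the results are the same as in a sequential loop; only the wall-clock time changes.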

01.08.14 temporally denser training annotations around the central one are now available, from a related GCPR 2014 work by Khoreva et al.: "Learning Must-Link Constraints for Video Segmentation based on Spectral Clustering" [pdf]. (Please consider also citing this work if you use these annotations.) The new annotations cover only the general task.

28.02.14 video naming and subfolders: the benchmark annotations and the source code of version 1.0 used a simplified naming for the BVDS videos. To reduce confusion we adopted the naming of the original BVDS. Note: the new code is compatible with the previous name notation and video structure, so you can keep using the (previously) downloaded labels with the new code. We are available to provide support.

15.02.14 we have corrected a naming issue (frame number) for the training sequence "galapagos", which only applied to motion and non-rigid motion labels.

13.02.14 bug report by Pablo Arbelaez: the ultrametric contour maps (UCM) which we computed from the annotations (used only for the BPR) were not thinned. This resulted in a slower boundary metric computation and slightly different BPR measures. We have now corrected the issue and will soon post an update of the BPR measures.