Video Segmentation

A major research focus in our group is video segmentation. One approach to video segmentation is motion segmentation, which makes it possible to retrieve object regions in a fully unsupervised manner. Another task is to propagate a segmentation given in one frame to the whole video. In 2014 and 2016, we co-organized a workshop on video segmentation.

European Conference on Computer Vision (ECCV), Springer, LNCS, Sept. 2010

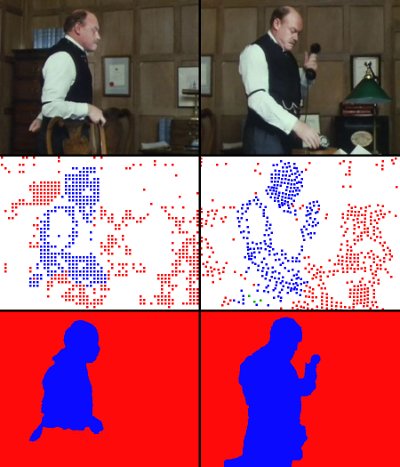

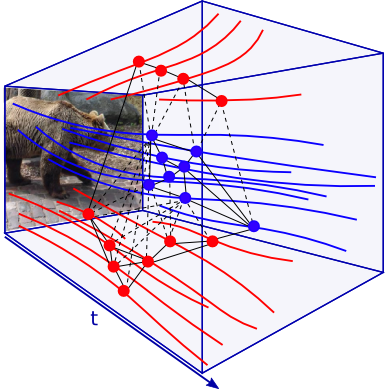

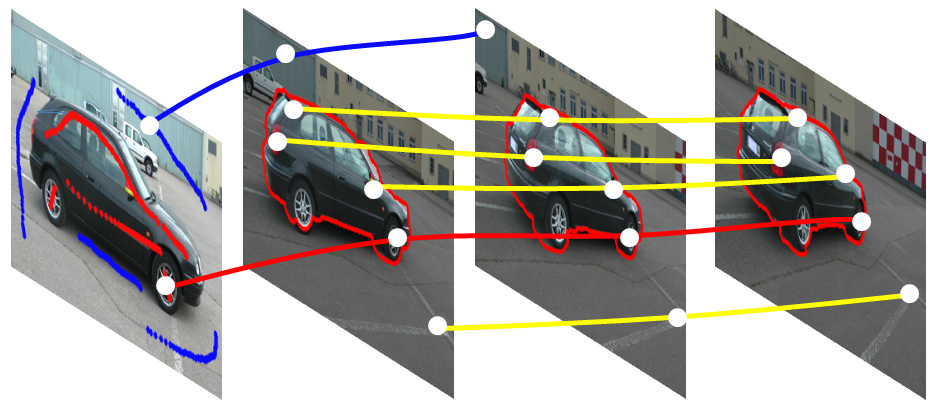

We found that analyzing point trajectories over long time spans, rather than relying only on optical flow between two frames, is very powerful for motion segmentation. The method extracts moving objects from video shots in a fully unsupervised manner.
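To illustrate why long-term analysis helps, here is a minimal sketch of a pairwise trajectory distance, under simplifying assumptions: each trajectory is an array of (x, y) positions per frame, and the distance is the largest squared difference in frame-to-frame motion over the frames both trajectories share. The function names and the `lam` scale are hypothetical, and the published distance may include further normalization terms that this sketch omits.

```python
import numpy as np


def shared_motion(traj_a, start_a, traj_b, start_b):
    """Frame-to-frame displacements of two trajectories over their common lifetime.

    traj_*: (T, 2) arrays of (x, y) positions, one row per frame.
    start_*: index of the first video frame in which the trajectory exists.
    Returns (da, db), each (S, 2), or None if they share fewer than two frames.
    """
    first = max(start_a, start_b)
    last = min(start_a + len(traj_a), start_b + len(traj_b))  # exclusive
    if last - first < 2:
        return None
    a = np.asarray(traj_a, dtype=float)[first - start_a:last - start_a]
    b = np.asarray(traj_b, dtype=float)[first - start_b:last - start_b]
    return np.diff(a, axis=0), np.diff(b, axis=0)


def motion_distance(traj_a, start_a, traj_b, start_b, long_term=True):
    """Squared motion difference: the maximum over the shared lifetime if
    long_term, otherwise only at the first shared frame pair (two-frame view)."""
    motions = shared_motion(traj_a, start_a, traj_b, start_b)
    if motions is None:
        return None  # no shared frames: no motion evidence either way
    da, db = motions
    per_step = np.sum((da - db) ** 2, axis=1)
    return float(per_step.max() if long_term else per_step[0])


def affinity(distance, lam=0.1):
    """Map a distance to a similarity in (0, 1]; lam is a hypothetical scale."""
    return float(np.exp(-lam * distance))


a = [[0, 0], [1, 0], [2, 0], [3, 0]]  # moves right at constant speed
b = [[0, 5], [1, 5], [2, 5], [2, 5]]  # moves with a, then stops
print(motion_distance(a, 0, b, 0, long_term=False))  # 0.0: looks like one object
print(motion_distance(a, 0, b, 0))                   # 1.0: motions diverge later
```

Two frames alone cannot tell these trajectories apart; taking the maximum over the whole shared lifetime does.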

IEEE Transactions on Pattern Analysis and Machine Intelligence, 36(6):1187-1200, 2014

We proposed a complete motion segmentation system that combines the above clustering of point trajectories with a dense segmentation. We also introduced the Freiburg-Berkeley Motion Segmentation (FBMS) benchmark, a dataset of 59 videos with pixel-accurate ground-truth segmentation annotations.
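Pixel-accurate ground truth allows a predicted region to be scored pixel by pixel. A common per-region score is precision, recall, and their harmonic mean (F-measure); the sketch below computes them for one binary mask against one ground-truth mask. This is an illustration under that assumption, not the benchmark's official evaluation, which also has to decide how predicted clusters are matched to ground-truth objects. The function name is hypothetical.

```python
import numpy as np


def mask_f_measure(pred, gt):
    """Precision, recall and F-measure of a predicted binary mask vs. ground truth."""
    pred = np.asarray(pred, dtype=bool)
    gt = np.asarray(gt, dtype=bool)
    tp = np.logical_and(pred, gt).sum()  # pixels correctly assigned to the object
    if tp == 0:
        return 0.0, 0.0, 0.0  # also covers empty prediction or empty ground truth
    precision = tp / pred.sum()
    recall = tp / gt.sum()
    f = 2 * precision * recall / (precision + recall)
    return float(precision), float(recall), float(f)


print(mask_f_measure([[1, 1, 0], [0, 0, 0]], [[1, 0, 0], [1, 0, 0]]))  # (0.5, 0.5, 0.5)
```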

IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2012

We extended the previous technique so that it can handle scaling and rotational motion. This leads to spectral clustering on hypergraphs.
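Why hypergraphs? A 2D motion with rotation, scaling and translation has four degrees of freedom, so any two trajectories can be explained by one such motion; judging whether trajectories really move together therefore needs at least three of them, and affinities naturally live on triplets (hyperedges). The sketch below assumes the triplet weights are already given and reduces them to a pairwise matrix by clique expansion, one standard reduction chosen here as an assumption, before a two-way spectral split with the normalized Laplacian. How the paper actually builds and clusters the hypergraph may differ; the function names are hypothetical.

```python
import numpy as np


def clique_expand(num_nodes, hyperedges):
    """Reduce weighted hyperedges to a symmetric pairwise affinity matrix.

    hyperedges: iterable of (nodes, weight), e.g. ((0, 1, 2), 0.9) for a
    triplet of trajectories assumed to fit one common motion well.
    """
    W = np.zeros((num_nodes, num_nodes))
    for nodes, weight in hyperedges:
        for i in nodes:
            for j in nodes:
                if i != j:
                    W[i, j] += weight  # every pair inside the hyperedge gets its weight
    return W


def spectral_bipartition(W):
    """Split nodes in two using the second eigenvector of the normalized Laplacian."""
    d = W.sum(axis=1)
    inv_sqrt_d = np.where(d > 0, 1.0 / np.sqrt(np.maximum(d, 1e-300)), 0.0)
    L = np.eye(len(W)) - inv_sqrt_d[:, None] * W * inv_sqrt_d[None, :]
    _, vecs = np.linalg.eigh(L)  # eigenvalues come back in ascending order
    fiedler = inv_sqrt_d * vecs[:, 1]  # rescale to the random-walk embedding
    return (fiedler >= 0).astype(int)


# Two groups of trajectories, linked by one weak cross-group triplet.
W = clique_expand(6, [((0, 1, 2), 1.0), ((3, 4, 5), 1.0), ((2, 3, 4), 0.05)])
print(spectral_bipartition(W))  # nodes 0-2 get one label, nodes 3-5 the other
```

For more than two motions, one would typically take several eigenvectors and cluster them (for example with k-means) instead of thresholding a single one.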

IEEE International Conference on Computer Vision (ICCV), Dec 2013

We further introduced a benchmark for general video segmentation, the VSB-100 benchmark.

IEEE International Conference on Computer Vision (ICCV), 2015

In this paper, we further improved the trajectory clustering approach by replacing spectral clustering with a minimum cost multicut.
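Finding a minimum cost multicut is NP-hard in general, so practical solvers rely on approximations. The sketch below uses greedy additive edge contraction (GAEC), one known heuristic, chosen here as an assumption; it is not necessarily the solver used in the paper. Edge costs are assumed to be signed: positive values favor putting two trajectories in the same cluster, negative values favor separating them. Unlike spectral clustering, the multicut takes no number of clusters as input; it follows from the costs. The function name is hypothetical.

```python
def greedy_additive_edge_contraction(num_nodes, edge_costs):
    """Approximate a minimum cost multicut by greedily merging clusters.

    edge_costs: dict {(u, v): cost}; positive = attractive, negative = repulsive.
    Returns a cluster id (0..K-1) for every node.
    """
    # adj[c] maps neighbouring cluster -> summed cost of all edges between them
    adj = {i: {} for i in range(num_nodes)}
    for (u, v), c in edge_costs.items():
        if u != v:
            adj[u][v] = adj[u].get(v, 0.0) + c
            adj[v][u] = adj[v].get(u, 0.0) + c
    parent = list(range(num_nodes))  # union-find forest of merged nodes

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    while True:
        # Pick the most attractive edge between two current clusters.
        best = None
        for u, nbrs in adj.items():
            for v, c in nbrs.items():
                if c > 0 and (best is None or c > best[2]):
                    best = (u, v, c)
        if best is None:
            break  # no edge left whose contraction lowers the cost
        u, v, _ = best
        # Contract v into u; parallel edges are merged by adding their costs.
        for w, c in adj.pop(v).items():
            del adj[w][v]
            if w != u:
                adj[u][w] = adj[u].get(w, 0.0) + c
                adj[w][u] = adj[w].get(u, 0.0) + c
        parent[v] = u
    ids = {}
    return [ids.setdefault(find(i), len(ids)) for i in range(num_nodes)]


costs = {(0, 1): 2.0, (2, 3): 2.0, (1, 2): -3.0, (0, 3): -1.0}
print(greedy_additive_edge_contraction(4, costs))  # [0, 0, 1, 1]
```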

IEEE International Conference on Computer Vision (ICCV), 2015

In contrast to the purely unsupervised approaches above, this approach uses user input to ensure good segmentations in all cases. We minimize the required user effort by coupling the user input with automated techniques that exploit the motion of point trajectories and color cues.
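The text does not spell out how user input and automated cues are coupled. One generic way, shown purely as an illustration, is to treat the trajectories a user marks as labeled seeds and spread their labels over a trajectory affinity graph by label propagation with clamped seeds, which converges to the harmonic solution on each connected component that contains a seed. The affinity matrix `W`, which would combine motion and color similarity, is assumed to be given; the function name and iteration count are hypothetical.

```python
import numpy as np


def propagate_labels(W, seeds, num_labels, iters=200):
    """Spread user labels over a trajectory graph.

    W: (N, N) symmetric non-negative affinities between trajectories.
    seeds: dict {trajectory index: label} from user input.
    Trajectories not connected to any seed keep all-zero scores and get label 0.
    """
    W = np.asarray(W, dtype=float)
    deg = W.sum(axis=1)
    deg[deg == 0] = 1.0  # isolated trajectories: avoid division by zero
    P = W / deg[:, None]  # row-stochastic transition matrix
    Y = np.zeros((W.shape[0], num_labels))
    seed_idx = np.array(list(seeds.keys()), dtype=int)
    Y[seed_idx, list(seeds.values())] = 1.0
    F = Y.copy()
    for _ in range(iters):
        F = P @ F  # each trajectory averages its neighbours' label scores
        F[seed_idx] = Y[seed_idx]  # user-labeled trajectories stay fixed
    return F.argmax(axis=1)


# A chain of five trajectories with one weak link between 2 and 3.
W = np.zeros((5, 5))
for i, j, w in [(0, 1, 1.0), (1, 2, 1.0), (2, 3, 0.1), (3, 4, 1.0)]:
    W[i, j] = W[j, i] = w
print(propagate_labels(W, {0: 0, 4: 1}, num_labels=2))  # [0 0 0 1 1]
```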

Demo videos on motion segmentation from point trajectories

We show some demos on videos from the Berkeley motion segmentation dataset. The left image shows the result of clustering the point trajectories of each video. The right image shows the outcome after color-based post-processing, which yields a dense segmentation.
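The exact color-based post-processing is not described here. As a simple stand-in, named plainly as such, the sketch below densifies sparse trajectory labels in one frame by giving each pixel the label of the nearest labeled trajectory point in a joint space of position and color. The function name and the `color_weight` parameter are hypothetical; the pairwise distance array has size H × W × K, so this is meant for small illustrative frames only.

```python
import numpy as np


def densify_labels(image, points, labels, color_weight=1.0):
    """Dense label map from sparse labeled trajectory points in one frame.

    image: (H, W, 3) float array.
    points: (K, 2) integer (row, col) positions of labeled trajectory points.
    labels: (K,) cluster label of each point.
    """
    image = np.asarray(image, dtype=float)
    h, w, _ = image.shape
    pts = np.asarray(points, dtype=int)
    rows, cols = np.mgrid[0:h, 0:w]
    pix_pos = np.stack([rows.ravel(), cols.ravel()], axis=1).astype(float)
    pix_col = image.reshape(-1, 3)
    seed_pos = pts.astype(float)
    seed_col = image[pts[:, 0], pts[:, 1]]  # color under each trajectory point
    # Squared distance in joint (position, color) space, shape (H*W, K).
    d = ((pix_pos[:, None, :] - seed_pos[None, :, :]) ** 2).sum(-1) \
        + color_weight * ((pix_col[:, None, :] - seed_col[None, :, :]) ** 2).sum(-1)
    nearest = d.argmin(axis=1)
    return np.asarray(labels)[nearest].reshape(h, w)


img = np.zeros((4, 4, 3))
img[:, :2, 0] = 1.0  # left half red
img[:, 2:, 2] = 1.0  # right half blue
print(densify_labels(img, [(0, 0), (3, 3)], [0, 1], color_weight=100.0))
```

A large `color_weight` lets color edges, rather than pure proximity, decide where one region ends and the next begins.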