Block-Seminar on Deep Learning

apl. Prof. Olaf Ronneberger (Google DeepMind)In this seminar you will learn about recent developments in deep learning with a focus on images and videos and their combination with other modalities like language. The surprising emerging capabilities of large language models (like GPT-4) open up new design spaces. Many classic computer vision tasks can be translated into the language domain and can be (partially) solved there. Understanding the current capabilities, the shortcomings and approaches in the language domain will be essential for the future Computer Vision research. So the selected papers this year focus on the key concepts used in todays large language models as well as the approaches to combine computer vision with language.

For each paper there will be one person, who performs a detailed investigation of a research paper and its background and will give a presentation (time limit is 35-40 minutes). The presentation is followed by a discussion with all participants about the merits and limitations of the respective paper. You will learn to read and understand contemporary research papers, to give a good oral presentation, to ask questions, and to openly discuss a research problem. The maximum number of students that can participate in the seminar is 10.

The introduction meeting (together with Thomas Brox's seminar) will be in person, while the mid semester meeting will be online. The block seminar itself will be in person to give you the chance to practise your real-world presentation skills and to have more lively discussions

Contact person: Rajat Sahay

|

|

Material

from Thomas Brox's seminar:

- Giving a good presentation

- Proper scientific behavior

- Powerpoint template for your presentation (optional)

Papers:

Please send your answers to the questions to the corresponding advisor before the seminar day.The seminar has space for 10 students

| No | Paper title and link | Comments | Student | Advisor |

|---|---|---|---|---|

| B1 | Back to Basics: Revisiting REINFORCE Style Optimization for Learning from Human Feedback in LLMs | This is the RL approach that actually works for LLMs in practice. | Lyubomir Ivanov | Leonhard Sommer |

| B2 | DeepSeek-V3 Technical Report | State of the art Open Source LLM. We will select a part of the paper for presentation. | Vladyslav Moroshan | Jelena Bratulic |

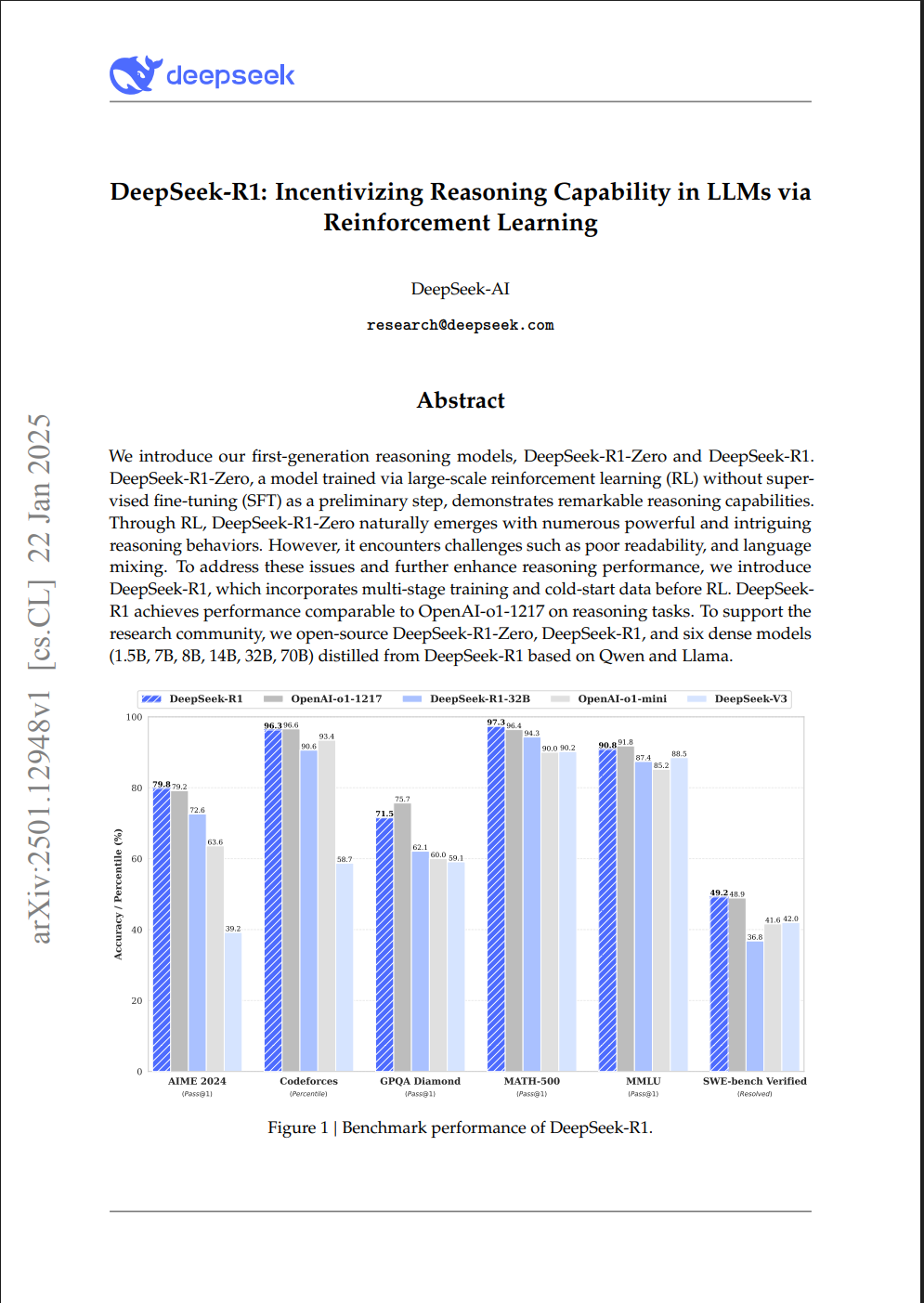

| B3 | DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning | Open Source reasoning LLM With a performance comparable to OpenAI-o1-1217 | Artin Sermaxhaj | Rajat Sahay |

| B4 | Qwen2.5-VL Technical Report | Another State-of-the-art Open Source LLM with strong vision capabilioties | Amal Abed | Jelena Bratulic |

| B5 | Gemma 3 Technical Report | Another strong State-of-the-art open source model | Simon Pfaendler | Simon Ging |

| B6 | SFT Memorizes, RL Generalizes: A Comparative Study of Foundation Model Post-training | RL helps to generalize | Daniel Rogalla | Karim Farid |

| B7 | Does Reinforcement Learning Really Incentivize Reasoning Capacity in LLMs Beyond the Base Model? | RL is the go-to technique to train the latest resoning models. Maybe it is not as transformative as people think? | Evin Joseph Bobby | Artur Jesslen |

| B8 | Intuitive physics understanding emerges from self-supervised pretraining on natural videos | Learning physics from videos only | Seif Saleh | Karim Farid |

| B9 | SigLIP 2: Multilingual Vision-Language Encoders with Improved Semantic Understanding, Localization, and Dense Features | A typical image encoder for LLM's | Hani Alnahas | Elias Kempf |

| B10 | Scaling Laws for Native Multimodal Models | Early or late fusion of the image encoder into the LLM | Josephine Chiara Bergmeir | Simon Ging |