Statistical Pattern Recognition

Prof. Thomas Brox

Statistical pattern recognition, also known as "machine learning", is a key element of modern computer science. Its goal is to find, learn, and recognize patterns in complex data, for example in images, speech, biological pathways, and the internet. In classical computer science, the program (the algorithm) is the key element of the process. Machine learning also uses an algorithm, the learning algorithm, but in the end the actual information lies not in the algorithm but in the representation of the data that this algorithm processes.

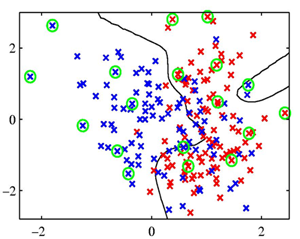

This course gives an introduction to the fundamentals of machine learning and its major tasks: classification, regression, and clustering. In classification, we learn a decision function from annotated training examples (e.g., a set of dog and non-dog images). Given a new image, the classifier should be able to tell whether or not it shows a dog. In regression, we learn a mapping from input variables to continuous output values; again, this mapping is learned from a set of input/output pairs. Both classification and regression are supervised methods, since the training data comes together with the correct outputs. Clustering, in contrast, is an unsupervised method: we are given only unlabeled data, and the clustering should separate the data into meaningful subsets. The course is largely based on the textbook "Pattern Recognition and Machine Learning" by Christopher Bishop. It emphasizes probabilistic modelling and thus goes somewhat deeper into the fundamentals of machine learning than other introductory machine learning lectures. The exercises consist of theoretical assignments and programming assignments in Python.
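To make the distinction between the three tasks concrete, here is a minimal NumPy sketch on toy synthetic data. It is an illustration only, not course material: least-squares line fitting (regression), a nearest-class-mean rule (classification), and k-means (clustering) are assumed stand-ins chosen for brevity, and the helper names `classify` and `kmeans` are hypothetical. The key point is that the first two learn from input/output pairs, while k-means sees the same points with their labels discarded.

```python
import numpy as np

rng = np.random.default_rng(0)

# --- Regression (supervised): learn a mapping x -> y from input/output pairs ---
x = np.linspace(0.0, 1.0, 20)
y = 2.0 * x + 1.0 + 0.05 * rng.standard_normal(x.shape)  # noisy line, true slope 2, intercept 1
X = np.column_stack([x, np.ones_like(x)])                 # design matrix with a bias column
w, *_ = np.linalg.lstsq(X, y, rcond=None)                 # least-squares fit: w ~ [slope, intercept]

# --- Classification (supervised): learn a decision function from labelled examples ---
class0 = rng.normal(loc=-2.0, scale=0.5, size=(50, 2))    # examples with label 0
class1 = rng.normal(loc=+2.0, scale=0.5, size=(50, 2))    # examples with label 1
mu0, mu1 = class0.mean(axis=0), class1.mean(axis=0)

def classify(p):
    # Hypothetical nearest-class-mean rule: return the label of the closer class mean.
    return int(np.linalg.norm(p - mu1) < np.linalg.norm(p - mu0))

# --- Clustering (unsupervised): k-means on the same points without labels ---
def kmeans(data, k, iters=20, seed=0):
    r = np.random.default_rng(seed)
    centers = data[r.choice(len(data), size=k, replace=False)]  # random initial centers (a copy)
    for _ in range(iters):
        # Assignment step: each point goes to its nearest center.
        d = np.linalg.norm(data[:, None, :] - centers[None, :, :], axis=2)
        labels = d.argmin(axis=1)
        # Update step: move each center to the mean of its points (skip empty clusters).
        for j in range(k):
            if np.any(labels == j):
                centers[j] = data[labels == j].mean(axis=0)
    return centers, labels

unlabeled = np.vstack([class0, class1])  # the class labels are discarded here
centers, labels = kmeans(unlabeled, k=2)

print("regression weights:", w)
print("classify([2, 2]) ->", classify(np.array([2.0, 2.0])))
print("cluster centers:\n", centers)
```

Note that k-means recovers the two groups only up to a permutation of cluster indices: it has no notion of which group is "label 0", which is exactly the information the supervised classifier received from the annotations.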

Slides and Recordings

| Date | Topic | Slides | Recordings | Solutions |
|---|---|---|---|---|
| 15.4. | Class 1: Introduction | MachineLearning01.pdf | MachineLearning01.mp4 | |
| 22.4. | Class 2: Probability distributions | MachineLearning02.pdf | MachineLearning02.mp4 | |
| 29.4. | Class 3: Mixture models, clustering, and EM | MachineLearning03.pdf | MachineLearning03.mp4 | |
| 6.5. | Class 4: Nonparametric methods | MachineLearning04.pdf | MachineLearning04.mp4 | |
| 13.5. | Class 5: Regression | MachineLearning05.pdf | MachineLearning05.mp4 | |
| 27.5. | Class 6: Gaussian processes | MachineLearning06.pdf | MachineLearning06.mp4 | |
| 3.6. | Class 7: Classification | MachineLearning07.pdf | MachineLearning07.mp4 | |
| 10.6. | Class 8: Support vector machines | MachineLearning08.pdf | MachineLearning08.mp4 | |
| 17.6. | Class 9: Projection methods | MachineLearning09.pdf | MachineLearning09.mp4 | |
| 1.7. | Class 10: Inference in graphical models | MachineLearning10.pdf | MachineLearning10.mp4 | |
| 8.7. | Class 11: Sampling methods | MachineLearning11.pdf | MachineLearning11.mp4 | |

Exercises

The exercise material is provided in a GitHub repository. There is an online forum for announcements, questions, and discussions.

See the short introduction to Git if you have never used Git before.